With the continuing advance of technology and an ever-growing amount of personal data on the Internet, the number of cyber attacks has been steadily increasing over the years. The Republic of South Africa, as a major emerging national economy, is no stranger to cyber attacks, ranging from simple spyware attacks to data breaches affecting millions of people.

Together, we delve into the 5 worst data breaches in South African history, ranked by BreachLevelIndex.com.

#5 – Government Communication and Information System (GCIS)

In a now-infamous attack on African governments in 2016 by the Anonymous collective, GCIS was targeted and lost 33,000 records to the attack.

The GCIS is a South African government department whose primary task is to manage communication with the public about government actions and policies.

How it happened

Vermeulen (2016A) reports that the hackers managed to access an old GCIS portal that most GCIS members no longer used, and that the leaked information was outdated.

What they lost

Anonymous obtained a total of 33,000 records, but only the details of around 1,500 government employees were made public.

These details comprised names, phone numbers, email addresses, and hashed passwords (Breach Level Index, 2017; Business Tech, 2017; Polity, 2013).

#4 – Traffic Fine Website ViewFines

Business Tech and Fin24 reported that close to a million (934,000) personal records of South Africans were publicly exposed online on 23 May 2018, following what appears to have been a governmental leak.

How it happened

The specifics are unknown, but the breach was made public by HaveIBeenPwned.com.

What they lost

Names, identity numbers and email addresses of South African drivers stored on the ViewFines website in plain text.

#3 – Ster Kinekor

This breach was, at the time, South Africa’s largest recorded data breach. At one point (June 2017) it was the 20th worst breach in the world, but it has since fallen to 115th (as of 19 March 2019).

More than 1.6 million accounts were leaked in total. This includes my account details, actually — and I did not even know about this until months after it happened!

How it happened

A hacker managed to exploit an enumeration vulnerability (a process whereby hackers can find out an account’s username from the feedback a site gives them) on Ster Kinekor’s old website to gain access to the database records. Ster Kinekor have a new website now and the vulnerability is no longer present.
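To make the idea of enumeration concrete, here is a minimal sketch in Python. It is not Ster Kinekor’s code: the account store, the function names and the messages are all hypothetical, and a real site would store password hashes rather than plain passwords. It only illustrates how different feedback for "unknown username" and "wrong password" lets an attacker work out which accounts exist.

```python
# Hypothetical in-memory account store; data is made up for illustration.
ACCOUNTS = {"alice@example.com": "correct-horse"}


def login_leaky(username: str, password: str) -> str:
    """Vulnerable: the message reveals whether the username exists."""
    if username not in ACCOUNTS:
        return "Unknown username"
    if ACCOUNTS[username] != password:
        return "Wrong password"
    return "Welcome"


def login_uniform(username: str, password: str) -> str:
    """Safer: every failed attempt gets the same message."""
    if ACCOUNTS.get(username) != password:
        return "Invalid username or password"
    return "Welcome"


def enumerate_usernames(login, candidates):
    """Return the candidates that the login feedback reveals as real accounts."""
    return [name for name in candidates if login(name, "x") == "Wrong password"]


candidates = ["alice@example.com", "bob@example.com"]
leaked = enumerate_usernames(login_leaky, candidates)    # finds alice
hidden = enumerate_usernames(login_uniform, candidates)  # finds nothing
print(leaked, hidden)
```

With the uniform message, a guessed username and a wrong password look identical from the outside, so the attacker learns nothing about which accounts exist.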

What they lost

According to HaveIBeenPwned.com (which you should check out, by the way, as it lets you know if your personal information has been leaked onto the internet), the compromised data includes dates of birth, email addresses, genders, names, passwords, phone numbers, physical addresses and languages spoken.

#2 – The South African Government (and Others)

Approximately 30 million records were leaked, as reported once more by multiple sources. The most recent data in the breach appears to have come from the deeds office, as the file containing the data was titled “masterdeeds.” It is difficult to establish a time frame for this breach because the file appears to contain data from multiple sources. The file was last modified in March 2017, which indicates when the most recent breach took place, but it also contains data dating back to the early 1990s.

How it happened

Due to the nature of the contents of the file (containing many different types of data from many potential sources) it is difficult to determine how it happened. It should be noted, however, that one can query data from DeedsWeb — the website of The Department of Rural Development and Land Reform.

What they lost

Addresses, income, living standard measure, contact information, employment status and title deed information.

#1 – Jigsaw Holdings

Reported by multiple sources including Compliance Online, Fin24 and Tech Central, this is the largest data breach in South African history and is ranked the 8th biggest leak in the world as of 19 March 2019. A total of 75 million records were lost, of which 65 million were South African!

“Who are Jigsaw Holdings and why do they have so much data?” I hear you ask. Jigsaw are a holding company for large real estate agencies such as Aida, Realty1 and ERA. It is likely that the data was used by their real estate agents to vet potential clients. Whether or not such a company should even be allowed to store this quantity of personal information is something that will hopefully be addressed by the POPI Act. You can read about the act here.

How it happened

The information was easily accessible on an open web server. What this means is that the data was simply lying out in the open. No hacking was even required to get to it. Login credentials were apparently displayed in error messages on another site. The same credentials were then used everywhere and gave you full administrator access to every database on the server! It gets better. All the personal data was contained in a single database in plain text. The lack of security displayed here when you are responsible for such a large amount of personal information is truly astounding.
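One of the failures described above, credentials showing up in error messages, is easy to illustrate. The following is a minimal sketch in Python and not Jigsaw’s actual code: the connection string, the credentials and all function names are hypothetical. It contrasts a handler that passes the raw error text to the visitor with one that keeps the details on the server and shows only a reference number.

```python
import logging
import uuid

logger = logging.getLogger("app")

# Hypothetical connection string; the credentials are made up for illustration.
DB_URL = "mysql://admin:S3cret@db.internal/clients"


def query_database(db_url: str) -> list:
    """Stand-in for a real query that fails and mentions its connection string."""
    raise ConnectionError(f"could not connect using {db_url}")


def handle_request_leaky() -> str:
    """Vulnerable: the raw exception text, credentials included, reaches the visitor."""
    try:
        return str(query_database(DB_URL))
    except ConnectionError as exc:
        return f"Error: {exc}"


def handle_request_safe() -> str:
    """Safer: details go to a server-side log; the visitor sees only a reference."""
    try:
        return str(query_database(DB_URL))
    except ConnectionError:
        reference = uuid.uuid4().hex[:8]
        logger.exception("database failure, reference %s", reference)
        return f"Something went wrong (reference {reference})."


leaky_page = handle_request_leaky()
safe_page = handle_request_safe()
```

This only addresses the error-message leak. Reusing one set of credentials everywhere and storing personal data in plain text are separate problems that need separate fixes.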

What they lost

Information ranging from ID numbers to company directorships. This opens up many possibilities for identity theft, and if your information has been leaked you need to be very vigilant.

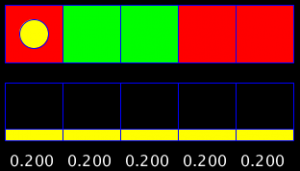

Here is a quick overview of the aforementioned data breaches:

| Rank | Organisation Breached | Records Breached |
| --- | --- | --- |
| 8 | Jigsaw Holdings | 65,000,000 |
| 16 | South African Government | 30,000,000 |
| 91 | ViewFines | 934,000 |
| 115 | Ster Kinekor | 1,600,000 |
| 333 | Government Communication and Information System (GCIS) | 33,000 |

Do organisations report data breaches?

Of the five organisations involved in this research, Ster Kinekor made no statement regarding its data breach. Multiple news outlets attempted to contact the company about the issue, and none of them received a response. The two government agencies that were breached (GCIS and South Africa’s Department of Water Affairs) were attacked by hacktivists from the Anonymous collective, who broadcast the breaches themselves.

In my opinion, most organisations should handle data breaches better. They need to inform the public as soon as they know of the breach so that their affected users can take the required actions as soon as possible.

Woo Themes – a South African start-up – suffered a particularly devastating malicious attack in 2012 (Blast, 2012). They lost all their data including back-ups (Blast, 2012; Chandler, 2014; Haver, 2015). They handled their breach very well, even managing to be commended by members of the public for how they dealt with the situation. The key aspect here was constant communication with the public.

Cost of Data Breaches to South African Companies

The Ponemon Institute (2017) conducted benchmark research sponsored by IBM on the cost of data loss to South African companies in 2016 (IBM, 2016). The research was performed by means of interviews conducted with members of 19 different companies over a ten-month period (Ponemon Institute, 2017). The results of the research set the average total cost of a data breach at 28.6 million Rand with the average cost of a single lost or stolen record set at 1,548 Rand (Ponemon Institute, 2017). It is notable that the research did not include any breaches that exceeded the loss of 100,000 records as this would have skewed the results (Ponemon Institute, 2017).

Digital Foundation (2017) cites the same Ponemon Institute research and reports the total cost of data breaches to the 19 companies involved as being 1.8 million US Dollars. The Ponemon research itself (as stated above) lists this same cost as 28.6 million Rand. At the exchange rate between the South African Rand and the US Dollar on the day the article was posted (2 February 2017), which was R13.36 per US Dollar, 1.8 million US Dollars comes to 24,048,000 Rand (XE, 2017A). That’s a difference of about 4.6 million Rand.

ITweb cites the Ponemon research as well and provides the figures in Rand matching what the Ponemon research says (Moyo, 2016).

Internet Solutions (2017) also refers to the Ponemon research and gives a cost of 1.87 million US Dollars per data breach. The article was published on 15 February 2017, and with the exchange rate at the time being R12.96 per US Dollar, we get a total of 24,235,200 Rand (XE, 2017B). This, too, differs from the Ponemon Institute number, by about 4.4 million Rand.
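The two conversions above can be checked with a few lines of Python. This is a minimal sketch: the dollar figures, exchange rates and the Ponemon total are the ones quoted in the text, while the variable and function names are hypothetical.

```python
PONEMON_COST_ZAR = 28_600_000  # average total cost reported by Ponemon, in Rand

# (source, cost in US Dollars, Rand per US Dollar on the publication date)
reports = [
    ("Digital Foundation", 1_800_000, 13.36),
    ("Internet Solutions", 1_870_000, 12.96),
]


def gap_to_ponemon(cost_usd: int, rate: float) -> tuple:
    """Convert a dollar figure to Rand and return (converted, shortfall vs Ponemon)."""
    converted = round(cost_usd * rate)
    return converted, PONEMON_COST_ZAR - converted


results = {name: gap_to_ponemon(usd, rate) for name, usd, rate in reports}
for name, (converted, gap) in results.items():
    print(f"{name}: R{converted:,} (R{gap:,} below the Ponemon figure)")
```

Both converted figures fall more than four million Rand short of the 28.6 million Rand that Ponemon reports.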

What we can conclude from this is that when reporting values in fluctuating currencies, it is best to stick with the currency of the country that you are referring to, especially when dealing with such large values, where small changes in the exchange rate can result in large differences in the numbers involved. The numbers from the Ponemon Institute research (2017) are more reliable than any other numbers found, as they are based on actual data from South African companies and not estimations.

Edited by:

Faris Šehović

References
